ScrapeNinja: never handle retries and proxies in your code again

Table of Contents

I am glad to announce that ScrapeNinja scraping solution just received major update and got new features:

Retries

Retries are must have for every scraping project. Proxies fail to process your request, the target website shows captchas, and all other bad things happen every time you are trying to get HTTP response. ScrapeNinja is smart enough to detect most of failed responses and retries via another proxy until it gets good response (or it fails, when number of retries is bigger than retryNum specified per request). The default retryNum setting is 2 now.

Timeout setting

While implementing timeouts may sound obvious, this feature took a while to get it right.

There are two kinds of timeouts you might think of when scraping something: total request timeout, and timeout per each retry. Since ScrapeNinja had retries feature enabled by default, after some thinking I decided that only per-attempt timeouts make sense. So, if you specify retryNum: 3, timeout: 5 when doing your scraping request – max possible total time per request will be 3*5=15 seconds.

Residential 4g proxies in Europe

Residential 4g proxies are pretty expensive, especially if we talk about bigger providers, so I was pretty excited to finally implement "4g-eu" geo for ScrapeNinja, which means even better success rates for a lot of websites. For now this geo is only available for ULTRA and MEGA subscription plans.

Brazil, Germany, France rotating proxies

ScrapeNinja just got "BR", "FR" and "DE" geos for scraping. Even on free plans! All these geos provide high quality datacenter proxies, the ip is rotated on every request.

Custom proxy setting

This was another popular request from new customers. While having multiple proxy geos in ScrapeNinja, these are not enough for many cases when scraping requires particular country or even region. In these cases customers prefer to specify their own proxy URL per scraping API request, which is possible starting from ULTRA plan in ScrapeNinja. I am now considering adding a feature of passing multiple proxies per request so ScrapeNinja will balance through passed custom proxies using round-robin algorithm - exactly like it does when it uses stock "geo" proxy cluster. This might be especially useful for not-100% reliable proxies (and nothing is 100% reliable in this world). Let me know if this might be useful for your use case!

Retries based on custom text found in HTTP response, and on HTTP status code

This makes ScrapeNinja versatile and able to solve a lot of more advanced scraping tasks! You can specify textNotExpected: ["some-captcha-request-text", "access-denied-text"] in ScrapeNinja request and it will transparently retry via another proxy if the specified text is found in response body. You can also specify statusNotExpected: [403, 503] and ScrapeNinja will retry if the HTTP response status code matches the listed codes.

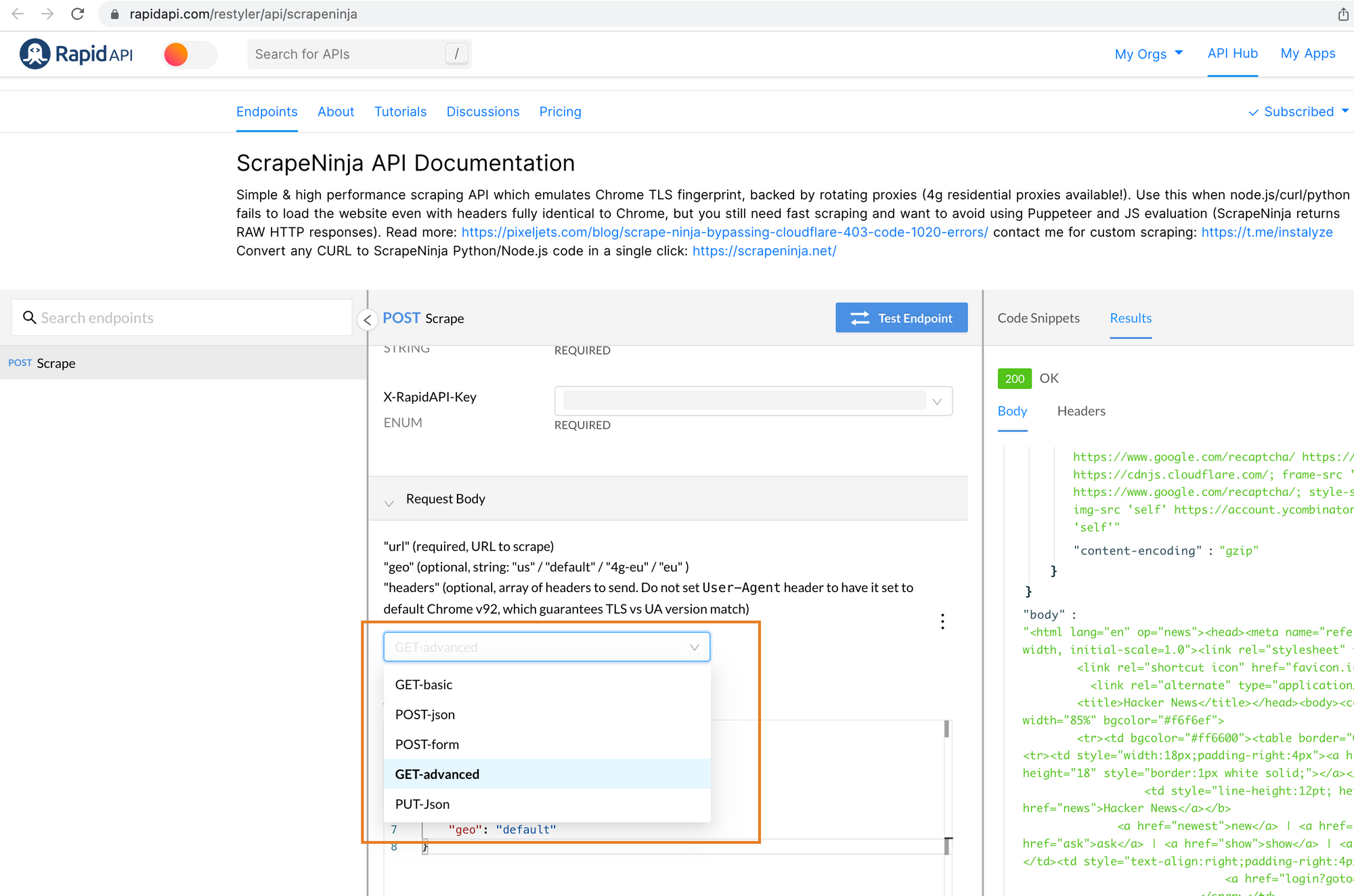

All new features are live on RapidAPI

Check the "examples" dropdown which contains a lot of sample RapidAPI payload options.

Eating own dog food

While I am really comfortable writing Node.js scraping code using node-fetch and got clients, I am now instead using ScrapeNinja under the hood for all my new scraping projects, because proper retries, response text verification, and proxy handling can not be implemented in an hour or two, there are always edge cases which will hit you in the back. The latency overhead of using ScrapeNinja vs node-fetch is so low I don't need to think twice about it, which is a huge relief from having to choose the right stack for every new small scraping task.

Read more about ScrapeNinja in the introduction writeup or proceed to ScrapeNinja API page