Self-hosted is awesome


I'm a big fan of self-hosting. As an indie hacker who has launched several micro-SaaS products and as the CTO of a small company, I now prefer to self-host all the tools I might need. Talented teams increasingly release high-quality self-hosted products, using open source as their primary marketing channel while selling a cloud version to generate revenue. Thanks to them, we can save tens or even hundreds of thousands of dollars over the lifetime of our projects while keeping full control over our data.

The list of self-hosted products I use

These products are awesome and have state-of-the-art UX, not the "it gets the job done, so I can cope with its weird design" feeling of 10 years ago, when self-hosted products were often just a couple of scripts cobbled together by some software engineering student. Here are a few wonderful examples of work-oriented self-hosted products I use:

- Metabase (online dashboard to build SQL reports; we use it at Qwintry)

- Mattermost (self-hosted Slack alternative)

- Directus (turns your SQL database into CMS)

- n8n (developer-friendly Zapier)

- LibreChat (ChatGPT interface for all popular LLMs)

- Mautic (Marketing newsletters)

Oh, and there is also Supabase... and the ELK stack... and Zabbix... (read more about our poor man's SRE setup)

This is a great self-hosted subreddit I recommend. I should note that many of the products discussed there are for personal use (torrents and the like), while I am mostly looking for solutions that help me do my work.

I believe self-hosting has several advantages for indie hackers and developers like myself. When you self-host, you have complete control over your infrastructure and data. This level of control is crucial for me as a developer because I need the flexibility to customize and integrate various tools seamlessly into my workflow.

The Cons

That being said, I think it's important to acknowledge that self-hosting comes with its own set of challenges. Maintenance, security, and scalability become my team's responsibility. Ensuring regular updates, implementing proper security measures, and managing infrastructure can be time-consuming tasks. However, if you have the technical skills and are willing to put in the effort, I believe self-hosting provides a level of independence and control that can be highly beneficial in the long run. It certainly is in our case.

The big question is: how technical do you need to be to embrace self-hosted products? There are no silver bullets here, and you need to know a lot about how computers work, but I don't think you need 5 years of web development or system administration background to self-host effectively and reliably anymore. Life got easier!

Docker skills are essential

Luckily, Docker and Docker Compose have greatly simplified the process of self-hosting. I have to admit, as a developer and CTO, in 2014-2018, I was initially hesitant to use Docker for our own web projects (especially the ones in the active development phase). It significantly complicated our deployment workflow and added a lot of overhead while providing marginal value. I didn't fully buy into the concept of 100% reproducible builds, especially considering the increased DevOps complexity that came with it.

Back then, I loved the ability to quickly hack and debug PHP scripts directly on production. While it's definitely a bad practice, there were countless times when this approach proved invaluable while debugging hard-to-reproduce bugs in a live environment: crucial for a small, rapidly changing product with just 1-3 developers!

Moreover, I struggled to wrap my head around Docker's concepts, such as volumes and bind mounts. The cryptic shorthand syntax made it even more challenging to understand how these components worked together. I found myself spending more time trying to decipher Docker's intricacies than focusing on our application's core functionality.

At the time, our team had a well-established workflow that allowed us to develop, test, and deploy our applications efficiently (AND FAST!). Introducing Docker felt like an unnecessary layer of complexity that would disrupt our processes without providing significant benefits in return.

However, our perspective eventually shifted towards Docker everywhere (with the exception of some development machines). Our projects matured and required more predictability; we strove for better CI/CD, dedicated teams of testers, better DevOps practices, and improved horizontal scalability. Equally important, Docker matured as well and became a great, boring technology and the de facto standard for hosting everything from API services to databases.

And Docker is what makes running complex self-hosted software so enjoyable. These applications, developed by external teams using varying technical stacks, now usually come with well-maintained Docker Compose files (official or community-supported) that make the setup process a breeze. Instead of dealing with the hassle of manual installation and configuration, I simply use the provided containers to spin up these applications quickly and easily.

You can still be a good web developer without knowing Docker – but it's hard to enjoy self-hosting nowadays without knowing it!

GPT and Claude to the rescue

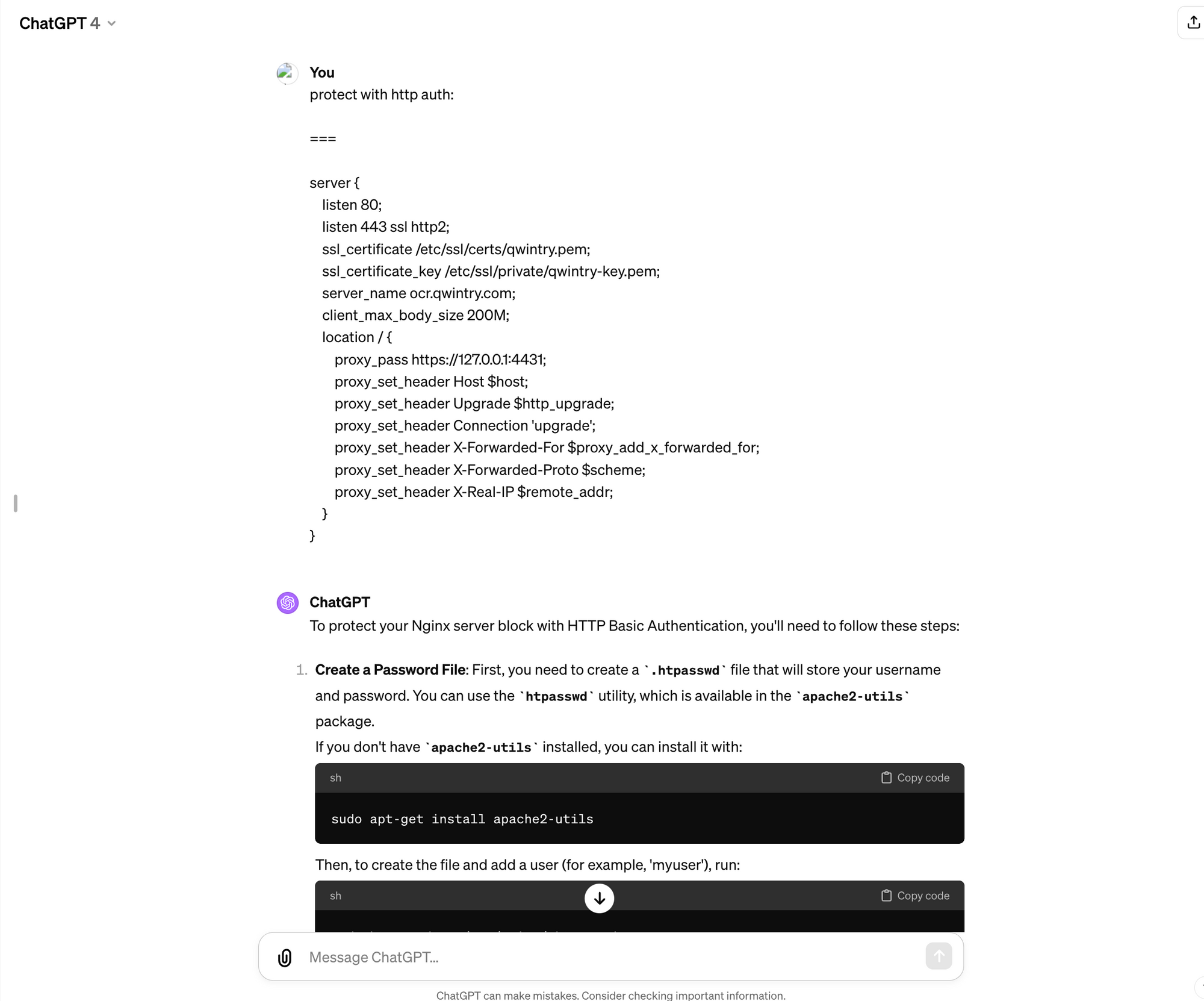

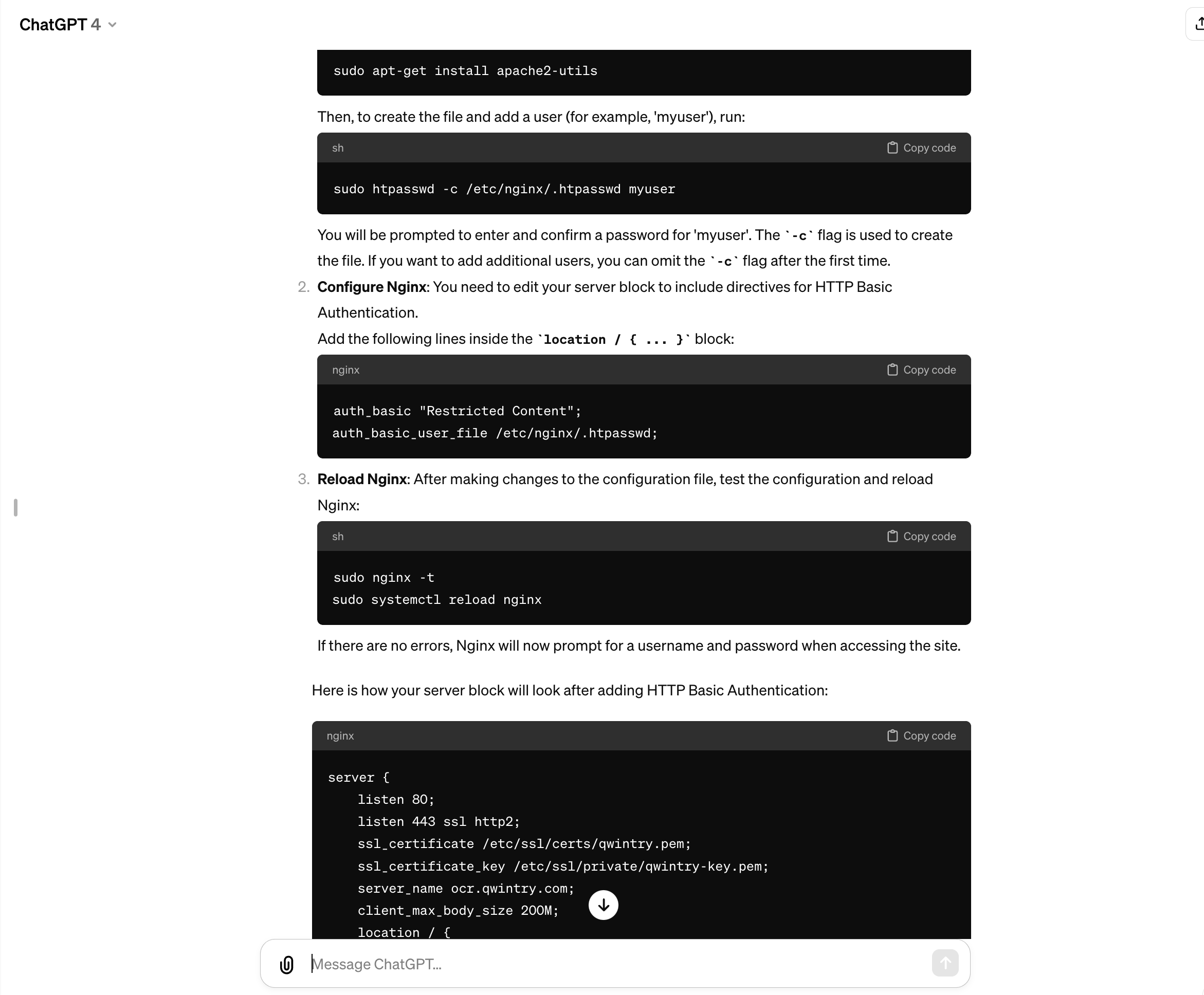

Besides Docker, to self-host effectively, you will also need a good understanding of:

- how Nginx routing works (well, you can use another webserver as an ingress controller, I just prefer Nginx).

- how Linux and networking in general work (firewall, ufw)

- how DNS and/or Cloudflare DNS works

Great news: you don't need to spend months experimenting with these. With the rise of LLMs there is a great shortcut nowadays, and it can be done in a matter of hours! (LLMs can help with Docker errors, too.)

Funding self-hosted product development

Developing high-quality, free, and open-source products requires funding. A common approach is to offer a cloud version of the product with some enterprise features to generate revenue. The Directus project had an interesting discussion on GitHub (https://github.com/directus/directus/discussions/17977) where the founder shared ideas on sustaining their product. They eventually adopted a strategy allowing free use of the entire platform unless the legal entity exceeds $5,000,000 USD in annual "total finances". This is an interesting approach!

Open-core concerns

Not all self-hosted products are created equal. Some cross the line, offering a barely usable free version as a marketing gimmick instead of a genuinely useful product. In my opinion, an acceptable practice is to restrict clearly enterprise features, such as SSO mechanisms (like in n8n), under paid licenses. However, restricting the free product to the point of being nearly unusable is not acceptable.

One example of a self-hosted product that faced community backlash is Budibase. They recently restricted their open-source version to a limited number of users, which led to criticism on Reddit (https://www.reddit.com/r/selfhosted/comments/17v48t8/budibase_will_soon_limit_users_on_oss_self_hosted/). Some users blamed this decision on the fact that Budibase is now VC-funded, suggesting that the pursuit of profitability led to the limitation of their free offering.

This incident highlights the balance that open-source projects must strike when seeking to generate revenue while maintaining the trust and support of their community. Restricting the free version too heavily can lead to a loss of goodwill and a perception that the project has abandoned its open-source roots in favor of commercial interests.

My real approach to self-hosting a product

Let's say I want to host n8n. These are my steps:

Basic steps for a demo

- Log in via SSH to my Ubuntu machine running on a remote Hetzner cloud server (I never host anything in Docker on my MacBook; I do everything on Hetzner via plain SSH and/or the awesome VS Code Remote extension, in case I need heavy config edits)

- Verify Docker is available and running on the server: `docker ps`

- Google `n8n docker` and open the docs: https://docs.n8n.io/hosting/installation/docker/#prerequisites

- Run the two commands highlighted in the manual to start the service

- Verify the n8n Docker containers are running. If not, explore container errors via `docker logs xxx` and check the n8n GitHub repository for similar issues: chances are someone already struggled with this!

- Port forward the service to my localhost so I can open it in Chrome via `http://localhost:3333`

These steps generally take 3-5 minutes at most to get the service running. Then I usually play with the service for an hour or two to decide whether I want to use it on a long-term basis.
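The port forwarding step can be sketched in shell as follows. This is a minimal sketch under assumptions of my own: the server address `root@203.0.113.10` is a placeholder, `tunnel_cmd` is a hypothetical helper name, and the remote port 5678 is taken from the Nginx config further down in this post. The helper only prints the command, so it can be reviewed before running.

```shell
#!/usr/bin/env bash
# tunnel_cmd SERVER [LOCAL_PORT] [REMOTE_PORT]
# Hypothetical helper: prints the ssh command that forwards a local port
# to a service listening on the server's own localhost.
tunnel_cmd() {
  local server="$1"
  local local_port="${2:-3333}"   # port opened in the browser on the laptop
  local remote_port="${3:-5678}"  # port the container publishes on the server (assumed)
  # -N: no remote shell, only the tunnel; -L: local port forwarding
  printf 'ssh -N -L %s:localhost:%s %s\n' "$local_port" "$remote_port" "$server"
}

# Placeholder address from the documentation range; replace with your server.
tunnel_cmd root@203.0.113.10
# prints: ssh -N -L 3333:localhost:5678 root@203.0.113.10
```

Run the printed command on the laptop and keep it open: `http://localhost:3333` then reaches the service without exposing any extra port to the public internet.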

- If I do, I go to Cloudflare and create a subdomain on one of my domains, with an A record pointing to my Hetzner cloud server (e.g. `n8n.scrapeninja.net`). Cloudflare handles HTTPS certificates, has a good DNS UI, and also protects my server IP addresses from occasional DDoS attacks (and it's free!)

- I create an Nginx host and point it to the Docker container. Here is an example of such a config:

    # Redirect plain HTTP to HTTPS
    server {
        listen 80;
        server_name n8n.scrapeninja.net;
        return 301 https://$host$request_uri;
    }

    server {
        listen 443 ssl;
        server_name n8n.scrapeninja.net;

        # Self-signed "snakeoil" certificate; the public certificate is handled by Cloudflare
        ssl_certificate /etc/ssl/certs/ssl-cert-snakeoil.pem;
        ssl_certificate_key /etc/ssl/private/ssl-cert-snakeoil.key;

        location / {
            proxy_pass http://localhost:5678;           # port published by the n8n container
            proxy_http_version 1.1;
            proxy_set_header Upgrade $http_upgrade;     # websocket support
            proxy_set_header Connection "Upgrade";      # websocket support
            proxy_set_header Host $host;
            chunked_transfer_encoding off;
            proxy_buffering off;
            proxy_cache off;
        }
    }
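Once the config file is saved, enabling it usually comes down to three commands. The sketch below assumes the Debian/Ubuntu layout (`/etc/nginx/sites-available` and `/etc/nginx/sites-enabled`) and an Nginx managed by systemd; `run` and `enable_site` are hypothetical helper names, and the file name is only an example. By default it prints the commands instead of executing them.

```shell
#!/usr/bin/env bash
# Print each command instead of running it, unless DRY_RUN=0 is set.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# enable_site NAME: link the host file, validate the config, reload Nginx.
# Assumes the host file already exists at /etc/nginx/sites-available/NAME.
enable_site() {
  local name="$1"
  run sudo ln -sf "/etc/nginx/sites-available/${name}" "/etc/nginx/sites-enabled/${name}" &&
    run sudo nginx -t &&
    run sudo systemctl reload nginx
}

# Dry run: shows the three commands that would be executed.
enable_site n8n.scrapeninja.net
```

Calling `DRY_RUN=0 enable_site n8n.scrapeninja.net` applies the change for real. Because the commands are chained with `&&`, a failed `nginx -t` stops the sequence before the reload.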